This electronic glove gets a grip on human touch

A glove studded with a network of sensors can identify objects through touch alone. It might be a big step toward designing better prosthetics.

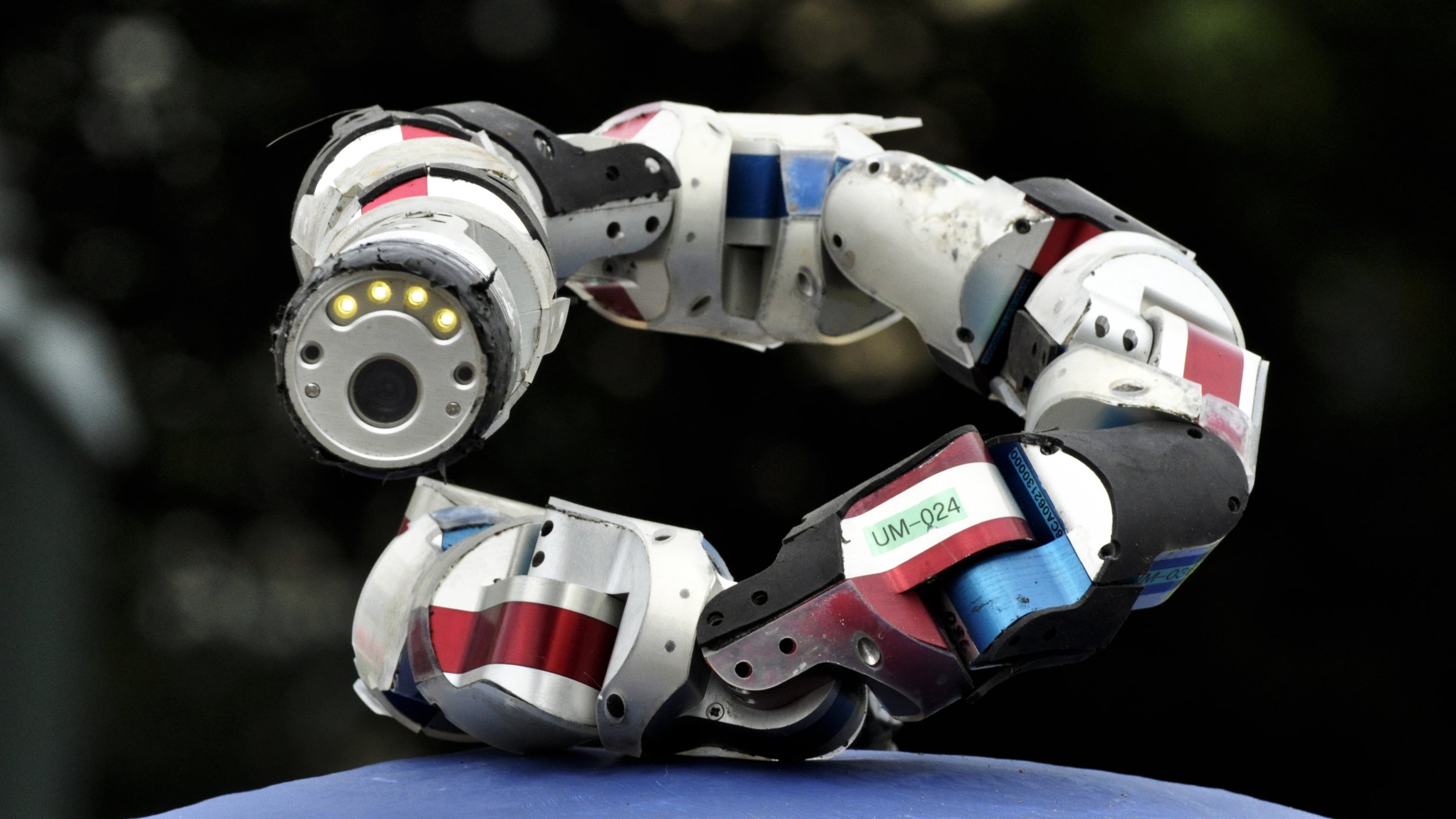

A low-cost glove for artificial touch, equipped with 548 pressure sensors. Image Credit: Courtesy of Subramanian Sundaram, MIT

Even blindfolded, most people could probably tell if someone tossed a football into their lap.

A quick brush of the fingertips would expose the football’s oblong shape and trademark laces; a gentle squeeze would reveal its inflated interior and dimpled, leathery texture. Especially sensitive hands might even pick out where the ball’s seams came together, or the subtle, embossed lettering spelling out “Wilson” on its flank.

Even when our other senses fail, the tactile information fed to our brains remains a powerful tool to navigate our surroundings. It’s hard to imagine life—or approximations thereof—without it.

But in spite of the ubiquity of touch, researchers still don’t have a good grasp on how to replicate these processes in machines. Though today’s robots have found homes in hospitals and on search and rescue teams, they lack the sensitivity and dexterity required to handle everyday objects. And while many recent advances have equipped robots with sophisticated tools to crunch visual data from their surroundings, the same can’t be said for intel gleaned from touch.

Now, a team of researchers from MIT has unveiled an invention that may finally be capturing a bit of that much-needed human touch: an electronic glove equipped with nearly 550 pressure sensors that, when worn, can learn to identify individual objects and estimate their weights through tactile information alone.

In its current iteration, the glove captures only pressure—just one of many types of tactile information human hands are sensitive to—and isn’t yet ready for applications outside the laboratory. But the product is easy and economical to manufacture, carrying a wallet-friendly price tag of only $10 per glove, and could someday inform the design of prosthetics, surgical tools, and more.

“The technology that these authors developed is really impressive…especially the number of sensors they were able to integrate,” says Sheila Russo, a roboticist and engineer at Boston University who was not involved in the study. “[The glove] has a lot of potential for different applications in robotics, but also for studying how the human hand actually behaves.”

Much of what researchers have learned about human movement and touch comes, unsurprisingly, from simply watching people move and touch objects. But when push quite literally comes to shove, visual information can only go so far. The moment something is touched, a subtle, complex dialogue is initiated between hand and brain, enabling us to respond in real time to an object’s weight, temperature, texture, and more.

To capture a slice of this sensory conversation, study author Subramanian Sundaram, a roboticist and engineer at MIT, built a glove to mimic the “hardware” of the human hand. In an approximation of the millions of microscopic receptors that speckle the surface of our skin, Sundaram and his team constructed a flexible, knitted glove outfitted with a tapestry of 64 electrically conductive threads. Each of the 548 points at which the threads overlapped created a sensor that could register when force was applied, allowing the researchers to generate an intricate series of pressure maps that monitored what the entire glove “felt” in real time.

The researchers next donned the glove and handled 26 objects spanning a range of weights, textures, and sizes, each for three to five minutes at a time. Most of the props were commonplace: a spoon, a stapler, scissors, a mug. Others, like a heavy stone cat and a horned melon, were a bit more idiosyncratic.

Sundaram and his colleagues then fed their newly acquired repository of data to a deep learning algorithm that, once trained, attempted to identify the objects through measurements taken during a second round of exploration. Though the system had an easier time distinguishing objects on the heavier end of the spectrum, like a tea box and the stone cat, its overall accuracy in the trial was about 75 percent. In a second set of tests, the researchers showed a separate glove-algorithm combo could predict the weights of objects it hadn’t yet encountered.

“This is really an impressive feat,” says Oluwaseun Araromi, a roboticist and mechanical engineer at Harvard University who was not involved in the study. “Getting such a large array of sensing data...really increases the resolution. This could help us understand how the brain is using [tactile] information to provide the kind of feedback it’s giving us.”

As the system learned, it even started to pick up on features related to the contours of certain objects, like the spikes of the horned melon, or the edges of a stapler. This kind of elementary pattern recognition, Sundaram says, echoes some of what’s known of the hand-brain interface in humans—though, for now, the glove can’t capture other contextual clues, such as an object’s temperature or pliancy.

Incorporating other sensors that can capture this information will be a key next step, Sundaram says. Ideally, future versions of the glove, which is currently tethered by the cables of its conductive threads, will also be wireless and slightly less cumbersome.

As development on the glove progresses, though, the list of potential applications is only growing. Wearable sensing devices would likely have markets in personal health care and sports. Similar technology could also aid medical robots or devices that need to perform tricky procedures—for instance, by mapping out the amount of force that needs to be applied to more delicate tissues, Russo says. Additionally, a system that collects and processes tactile information could enhance rehabilitation or assistance devices for stroke patients, or others who have suffered damage to their sense of touch, Araromi says.

The glove interacting with a horned melon (kiwano). Image Credit: Courtesy of Subramanian Sundaram, MIT

Eventually, high-tech tactile sensing could even enable prosthetic and robotic hands to approach the subtle control the human body effortlessly achieves. These kinds of applications are probably some of the furthest off, Sundaram says, but data collected by the glove might already hint at possible design shortcuts. In a final analysis, the team mapped out which parts of the hand were most likely to be used together, such as the tips of the thumb and index finger, which often pair up to grasp objects. Zeroing in on these anatomical partnerships could help prosthetics engineers strategically place pressure sensors where they’re most needed, Sundaram says.

Regardless of what’s next for the glove, Sundaram hopes the fact that it’s cheap and easily built will make it a widely used tool. That kind of ubiquity, he says, will help generate the volume and variety of data that’s needed to better understand touch in humans and machines alike.

“We wanted to make something that a lot of people could use,” he says. “It’s not only about sharing know-how...Part of our success [hinges on] how well we can get other people to use this device, too.”